Using Grounded Theory to Avoid Research Misconduct in Management Science

Isabelle Walsh, Neoma Business School

Abstract

In this article, I show that several of the most common forms of research misconduct in quantitative research in management science could be avoided if researchers made open, comprehensive use of the well-established Grounded Theory paradigm when using quantitative data. Investigating various mainstream management research outlets, I found that this is scarcely ever the case. I propose some viable alternatives for the design of quantitative and mixed studies in management science. If these alternatives are used, researchers could follow the main basic assumptions that lie at the roots of Grounded Theory, and make sure these assumptions are clearly stated in order to avoid being pushed toward episodes of misconduct that have become common in the field of management science.

Keywords: research misconduct; quantitative and mixed studies; GT paradigm

Introduction

In 2010, Bedeian, Taylor, and Miller investigated questionable research conduct through a survey of 448 faculty respondents. They grouped possible forms of research misconduct into three broad categories, the first being considered the most serious. These were: fabrication, falsification, and plagiarism; questionable research practices; and other misconduct. Within the first category, I am specifically concerned in the present article with those studies that withhold methodological details/results, and those that select only those data that support a hypothesis while withholding the rest. Bedeian et al. (2010) described this practice as “cooking data” (p. 718). Within the second category of research misconduct, I focus on those studies that develop hypotheses after results are known; this practice is known as “HARKing” (Hypothesizing After the Results are Known: Kerr, 1998; Garst, Kerr, Harris, & Sheppard, 2002).

This article argues that one way to help solve important research misconduct issues in quantitative management research might be to revisit grounded theory (GT: Glaser & Strauss, 1967). GT could serve as a research paradigm applied in mixed-method studies, which may help researchers avoid making claims and conjectures after quantitative data yield surprising results.

This article is organized as follows: I first summarize some common forms of research misconduct in this field, which leads to an established type of design for quantitative research published in mainstream journals. I then propose some alternative designs for quantitative studies in management science. I investigate the literature for published studies that included quantitative and qualitative data and methods in a GT approach, and show that mixed-method GT research is scarcely present in top-tier research outlets in management science. I conclude by encouraging management researchers to apply what is already “ancient history” in other fields of research.

Cooking Data and HARKing: Two Important Issues in Quantitative Studies in Management Science

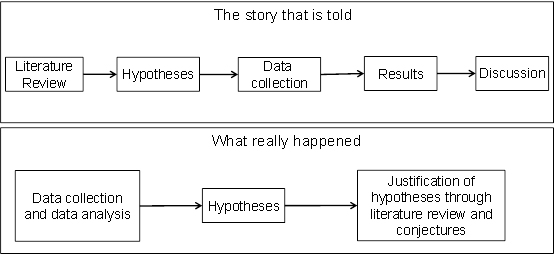

Eighty percent of Bedeian et al.’s (2010) respondents reported witnessing “cooking data” (p. 718) and 90 percent “HARKing” (Garst et al., 2002; Kerr, 1998). The article reported that hypothesizing after the results are known was often expected, with junior researchers being instructed to “comb through correlation matrices and circle the significant ones” and to examine “all possible interactions or moderators” (p. 719). This would not be reprehensible in itself if it were openly reported as such. However, this is scarcely the case, as most quantitative research takes a hypothetical deductive stance. Presentations of most so-called quantitative positivist studies published in the mainstream management literature start with a literature review that leads to hypotheses, which are subsequently tested. Unexpected results are explained by “conjectures” (Glaser, 2008). This linear design is quite acceptable if it relates an empirical research study accurately and truthfully, the way it actually happened. However, Bedeian et al.’s (2010) results show that this is rarely the case, and that hypothesizing often occurs after the data have been collected and results from statistical analyses have been obtained. This can lead to the so-called Texas sharpshooter bias (Gawande, 1999; Thomson, 2009): the fabled “Texas sharpshooter” fires a shotgun at a barn and then paints the target around the most significant cluster of bullet holes in the wall. Accordingly, the Texas sharpshooter fallacy “describes a false conclusion that occurs whenever ex post explanations are presented to interpret a random cluster in some data” (Biemann, 2012, p. 2).

As an illustration of this linear process of traditional quantitative studies published in management, see figure 1.

Figure 1. Traditional design of quantitative research “suffering” from Texas sharpshooter bias.
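The Texas sharpshooter bias described above can be made concrete with a small simulation. The following is a minimal sketch, not an analysis drawn from any of the cited studies: it generates survey-like variables that are pure noise, then “combs through” the correlation matrix and counts the pairs that cross a conventional 5% significance threshold. The function names, the sample sizes, and the use of the Fisher z approximation for the critical value are all illustrative assumptions.

```python
import math
import random


def pearson_r(x, y):
    """Sample Pearson correlation of two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def count_spurious_correlations(n_variables=20, n_respondents=100, seed=0):
    """Return (number of variable pairs, number 'significant' at about 5%)."""
    rng = random.Random(seed)
    # Pure noise: every variable is independent of the others by construction,
    # so any "significant" correlation found below is a random cluster.
    data = [
        [rng.gauss(0.0, 1.0) for _ in range(n_respondents)]
        for _ in range(n_variables)
    ]
    # Approximate two-sided 5% critical value for |r| (Fisher z-transform).
    critical_r = math.tanh(1.96 / math.sqrt(n_respondents - 3))
    n_pairs = 0
    n_significant = 0
    for i in range(n_variables):
        for j in range(i + 1, n_variables):
            n_pairs += 1
            if abs(pearson_r(data[i], data[j])) > critical_r:
                n_significant += 1
    return n_pairs, n_significant


def familywise_error_rate(n_tests, alpha=0.05):
    """Chance of at least one false positive, treating tests as independent."""
    return 1.0 - (1.0 - alpha) ** n_tests


if __name__ == "__main__":
    pairs, hits = count_spurious_correlations()
    print(f"{hits} of {pairs} noise correlations look significant")
    print(f"P(at least one false positive): {familywise_error_rate(pairs):.4f}")
```

With 20 unrelated variables there are 190 pairwise correlations, so roughly 5% of them can be expected to look significant by chance alone. Treating the tests as independent is only an approximation, but it shows why a hypothesis painted around the largest of these coefficients after the fact carries little evidential weight unless the exploratory process is openly reported.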

How, then, can quantitative research avoid such misconduct, and what alternative research methodology can be proposed? Before answering these questions, I first address (in the next section) the difference between the terms “mixed-method” and “multi-method”.

Mixed-Method and Multi-Method Research

It is necessary to distinguish between the terms “mixed-method” and “multi-method” as these terms are both used and understood differently in the literature. This article follows Morse’s (2003) differentiation between these two terms.

I understand a multi-method design as the use in one single project of different research methods that are complete in themselves. For example, in a research project, one may choose to conduct interviews and collect qualitative data using a GT approach, and use the resulting emerging theory to develop and lay down hypotheses. Then the researcher may collect quantitative data and verify the hypotheses through statistical methods using a hypothetical deductive stance.

I understand a mixed-method design (the design that interests us in the present work) as including different quantitative and qualitative methods to supplement each other within a single project. For example, one may collect qualitative and quantitative data and analyze these through various qualitative and quantitative methods. Neither qualitative nor quantitative data/methods are sufficient in themselves for theorization or verification; both are necessary. Such a project may be qualitatively or quantitatively driven, depending on the “core” method. The other (“imported”) methods serve to enlighten and supplement the “core” method. The theoretical drive may be inductive overall, with description, discovery, and/or exploration as purposes of the research (as is the case for GT studies); in this case, qualitative methods will more often be the core methods used. Alternatively, the drive may be deductive if confirmation is the purpose; in this case, quantitative methods are more often the core methods used. Thus, there are different types of mixed-method studies, and one can refer to Morse (2003) – and, more generally, to Tashakkori and Teddlie’s (2003) excellent handbook – for an extensive review.

In the case of multi-method studies, “the need is small and the yield not great … Quantitative research when used for verification … is just not worth it” (Glaser, 2008, p. 12). Quantitative data should be used as “more data to compare conceptually, generate new properties of the theory, and thereby raise the level of plausibility of the theory. In short a quantitative test is really just more data for modification” (Ibid.). This leads us to mixed-method rather than multi-method studies in order to propose alternative designs for quantitative studies.

Alternative Designs for Quantitative Studies in Management Science

Much more than a method or a methodology, GT may be considered as a research paradigm (Glaser, 2005) that could be extremely useful in a ‘young’ and ‘soft’ science such as management. It is a paradigm, in the sense given to this word by Klee (1997): a model to be imitated, adapted, and extended “that defines practice for a community of researchers” (p. 135). It allows researchers to discover what is happening in a substantive domain and to structure reality. This paradigm is not limited by a given epistemology or ontology: it is “open” to any epistemology and ontology that may be espoused by researchers. It relies on collected data and the exploratory approach applied with the various techniques chosen by the researchers to analyze their data. Even though GT has been used mostly with qualitative data, it is an inductive research paradigm that can be used with any data, in any way, and in any combination. This has been stated by Glaser a number of times (Glaser, 2008). Most importantly, this paradigm should allow quantitative researchers to ‘tell the truth’ and relate their inductive research as it actually happened.

When conducting quantitative research, and if you wish to take an inductive approach grounded in data, I would propose being clear about this and relating your research the way it happened. This would mean not necessarily following established publishing canons, however tempting this might be. You might even aim to help establish new publishing canons.

If the literature is not sufficient to allow you to lay down hypotheses and/or if you feel urged to test unexplored possibilities, you should be clear about this (see Alternative design 1, figure 2) rather than pretend otherwise (see The story that is told, figure 1). When unexpected results occur, you could pursue data collection through a qualitative approach, in the hope of making sense of your data and emerging results (see Alternative design 2, figure 2).

Furthermore, even if hypotheses relying on the literature are verified, this should not stop you from exploring other possible paths further. By so doing, and if you remain in an exploratory grounded stance, you might uncover essential theoretical elements that were previously unrevealed.

The next section investigates the literature from some mainstream research outlets in management science to search for studies that might do this and use mixed-methods in a GT approach.

Figure 2. Some possible grounded designs to avoid research misconduct

Note: The double arrows are non-functionalist and aim to represent the continuous comparative analysis of data in a GT study.

Investigation of the Mainstream Management Literature for Mixed-Method GT Studies

I investigated the literature for empirical mixed and multi-method studies that used qualitative and quantitative data and methods, with a GT approach. My preliminary search was done for both mixed-method and multi-method studies – as there does not appear to be a full consensus on the definition of these terms in the literature. For my preliminary search, I used POP4 (Publish or Perish) software (Harzing, 2007), which uses Google Scholar as the database. I searched for the following terms anywhere in the text/references of articles: “grounded theory” + (“mixed method” or “mixed-method” or “multi-method” or “multi method” or “multi-method”) + “quantitative” + “qualitative”. I investigated articles published in four top-tier management science outlets: the Academy of Management Journal, Academy of Management Review, Administrative Science Quarterly, and Organization Studies.
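The Boolean logic of this search string can be sketched as a simple text filter. This is only an illustration of how the terms combine: it does not reproduce how Publish or Perish or Google Scholar match documents, and the function name `matches_search` and the sample strings are hypothetical.

```python
# Terms that must all appear somewhere in the text or references.
REQUIRED_TERMS = ("grounded theory", "quantitative", "qualitative")

# Spelling variants of which at least one must appear.
# The search string lists "multi-method" twice; it appears once here.
METHOD_TERMS = ("mixed method", "mixed-method", "multi-method", "multi method")


def matches_search(full_text):
    """Return True if the text satisfies the AND/OR structure of the query."""
    text = full_text.lower()
    has_required = all(term in text for term in REQUIRED_TERMS)
    has_method = any(term in text for term in METHOD_TERMS)
    return has_required and has_method


if __name__ == "__main__":
    sample = (
        "We adopt a grounded theory approach in a mixed-method design, "
        "combining qualitative interviews with quantitative survey data."
    )
    print(matches_search(sample))
```

A match on these terms only flags a candidate article. As the next paragraphs show, each candidate still has to be read to establish whether GT is actually applied rather than merely cited.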

Of the 14 articles that my preliminary search yielded, only three possibly fitted my purpose (Grant, Dutton, & Rosso, 2008; O’Mahony & Ferraro, 2007; Schall, 1983). I further investigated and analyzed these three articles.

Both Grant et al. (2008) and Schall (1983) mention GT only en passant, as a simple reference: Grant et al. mention only their “emerging theoretical understanding” (p. 902) and Schall mentions that “no predetermined categories were used” (p. 567). They certainly do not stress the emergence of theory, the constant comparison between all sets of data, or theoretical sampling. Of the three identified texts, only one (Schall, 1983) uses mixed methods. The other two (Grant et al., 2008; O’Mahony & Ferraro, 2007) use a multi-method approach; they adopt a GT approach to develop hypotheses that they subsequently test with quantitative data and methods. Thus, they divide their work into two sections: an inductive, exploratory, GT-building section with qualitative data; and then a confirmatory, deductive section with quantitative data to test the predictions resulting from the first part of the article. For instance, Grant et al. (2008) utilize quantitative survey data to verify the sense-making mechanisms highlighted with their qualitative data.

It is particularly striking that the only one of the three studies that in any way approached what I was searching for dates from as far back as 1983. This tends to confirm that, although the importance of exploratory quantitative analysis is a long-established concern (see, for instance, Benzécri, 1973; or Tukey, 1979), in more recent years, quantitative research in management science has implicitly adopted the hypothetical deductive stance of hard sciences, despite this leading to widespread research misconduct and being ill-adapted for theoretical development. This is most probably induced by management science’s quest for legitimacy, which drives this soft science to try to imitate the methods of ‘hard’ sciences even though these methods might be inappropriate (De Vaujany, Walsh, & Mitev, 2011).

Conclusion

In the present article, I have shown that in management science we have at our disposal instruments and an established paradigm (Glaser, 2005) that could help researchers respect mandatory deontological precepts while conducting their research (in particular that involving quantitative data). These instruments are, however, little used in management research, even though they might yield valuable theoretical results. Inductive, exploratory, grounded quantitative research is not new. It has been established for many years in other fields. In the mid-1960s, at the time when Glaser defended his dissertation (which used quantitative data), people such as Lazarsfeld (who subsequently published inductive quantitative studies himself, e.g., Lazarsfeld, Thielens, and Riesman, 1977) or Havemann and West (1952) were encouraging the use of quantitative data in inductive research. It is perhaps timely for researchers in management science to use GT more comprehensively. While doing so, it is essential for false methodological claims to be eliminated, and to avoid the second form of mislabeling associated with GT highlighted by Birks, Fernandez, Levina, and Nasirin (2013): that of omission (in which GT is used but not reported as such). When conducting quantitative research, I propose that researchers follow the basic assumptions that lie at the roots of GT, and make sure these assumptions are explicitly stated. This will prevent researchers from being pushed toward episodes of misconduct that have become common in the field of management science.

References

Bedeian, A., Taylor, S., & Miller, A. (2010). Management science on the credibility bubble: Cardinal sins and various misdemeanors. Academy of Management Learning and Education, 9(4), 715–725.

Benzécri, J. (1973). La place de l’a priori. Encyclopédia Universalis, Vol 17, Organum, 11–24.

Biemann, T. (2012). What if we are Texas Sharpshooters? A New Look at Publication Bias. Academy of Management Conference, Boston.

Birks, D., Fernandez, W., Levina, N., & Nasirin, S. (2013). Grounded theory method in information systems research: its nature, diversity and opportunities. European Journal of Information Systems, 22(1), 1–8.

De Vaujany, F-X., Walsh, I., & Mitev, N. (2011). An historically grounded critical analysis of research articles in IS. European Journal of Information Systems, 20, 395–417. doi:10.1057/ejis.2011.13.

Garst, J., Kerr, N., Harris, S., & Sheppard, L. (2002). Satisficing in hypothesis generation. The American Journal of Psychology, 115(4), 475–500.

Gawande, A. (1999). The cancer-cluster myth. The New Yorker, 9, 34–37.

Glaser, B. (2005). The Grounded Theory perspective III: Theoretical Coding. Mill Valley, CA: Sociology Press.

Glaser, B. (2008). Qualitative and Quantitative Research. The Grounded Theory Review, 7(2), 1–17.

Glaser, B., & Strauss, A. (1967). The discovery of Grounded Theory: Strategies for Qualitative Research. New York: Aldine de Gruyter.

Grant, A., Dutton, J., & Rosso, B. (2008). Giving Commitment: Employee Support Programs and the Prosocial Sensemaking Process. Academy of Management Journal, 51(5), 898–918.

Harzing, A. (2007). Reflections on the h-index. Retrieved from http://www.harzing.com/pop_index.htm.

Havemann, E., & West, P. S. (1952). They Went to College: The College Graduate in America Today. New York: Harcourt, Brace.

Kerr, N. (1998). HARKing: Hypothesizing after the results are known. Personality and Social Psychology Review, 2(3), 196–217.

Klee, R. (1997). Introduction to the philosophy of science: cutting nature at its seams. Oxford: Oxford University Press.

Lazarsfeld, P. F., Thielens, W., & Riesman, D. (1977). The Academic Mind: Social Scientists in a Time of Crisis. New York: Arno Press.

Morse, J. (2003). Principles of Mixed Methods and Multimethod Research design. In A. Tashakkori & C. Teddlie (Eds.), Handbook of Mixed Methods in social and behavioral research (pp. 189–208). Thousand Oaks: Sage Publications Ltd.

O’Mahony, S., & Ferraro, F. (2007). The Emergence of Governance in an Open Source Community. Academy of Management Journal, 50(5), 1079–1106.

Schall, M. (1983). A Communication-Rules Approach to Organizational Culture. Administrative Science Quarterly, 28(4), 557–581.

Tashakkori, A., & Teddlie, C. (2003). Quality of inferences in mixed methods research: Calling for an integrative framework. In M. M. Bergman (Ed.), Advances in mixed methods research: Theories and Applications (pp. 101–119). London: Sage Publications Ltd.

Thomson, W. (2009). Painting the target around the matching profile: the Texas sharpshooter fallacy in forensic DNA interpretation. Law, Probability and Risk, 8(3), 257–276.

Tukey, J. (1979). Methodology, and the statistician’s responsibility for BOTH accuracy AND relevance. Journal of the American Statistical Association, 74(368), 786–793.